In this post, we delve into the details of the data availability problem and how it can impact scaling on Ethereum.

What is the Data Availability problem?

The Data Availability (DA) problem: How can peers in a blockchain network be sure that all the data of a newly proposed block is actually available? If the data is not available, the block might contain malicious transactions which are being hidden by the block producer. Even if the block contains non-malicious transactions, hiding them might compromise the security of the system.

To provide an example, suppose Alice is an operator of a ZK-Rollup (ZKR). She submits a ZK-Proof on Ethereum which is verified. If she does not submit all the transactional data on Ethereum, although her proof proves that all state transitions taken in the rollup are valid, still users of the rollup might be in the dark about their current account balances. The submitted proof sheds no light on the current states because of the Zero-Knowledge nature of it.

An analogous example exists in the Optimistic Rollup (OPR) setting, where Alice submits an assertion on Ethereum, but none of the participants of the OPR can challenge it because the transactional data is not available and hence they are unable to recalculate or challenge the assertion.

To counter the above scenarios, both OPR and ZKR designs mandate operators to submit all transactional details on Ethereum as ‘calldata’. While this makes them evade the DA problem in the short term, as the number of transactions grows inside rollups, the amount of data that needs to be submitted would also grow, limiting the amount of scaling that these rollups can offer.

To make matters worse, data unavailability is a not uniquely attributable fault. This means that participants cannot prove to other peers that a particular chunk of data is missing. This is because Bob can broadcast that the block submitted by Alice has missing data, but when Charlie queries Alice, she may provide the data to him.

How does this affect a blockchain today?

To answer this question, let us first revisit the general block structure of an Ethereum-like blockchain and the types of clients that exist on any blockchain network.

A block can be divided into two main parts:

Block Header: A small block header contains the digest and metadata related to the transactions included in the block.

Block Body: This contains all the transactional data and makes up the majority of the block size.

In conventional blockchain protocols, all nodes are considered as full nodes that sync up the entire block and verify all state transitions. They need to spend a considerable amount of resources to check transactional validity and store the blocks. On the upside, these nodes cannot be made to accept any invalid transaction.

There might be another class of nodes that do not have (or do not want to spend) resources to verify every transaction. Instead, they are mainly interested in knowing the current state of the blockchain and whether some transactions, which are relevant to them, are included in the chain or not. Ideally, these light clients should also be protected from following a chain that contains invalid transactions. This is actually possible using so-called fraud proofs. These are succinct messages that show that a particular block body includes a transaction that is invalid. Any full node can produce such a fraud-proof, and the light client thus does not have to trust that a particular full node is honest. They just have to make sure that they are well connected to a gossip network that ensures that if there is fraud-proof available for a block header, they will receive it.

However, there is one problem with this system: what if a block producer does not reveal the entire data behind a block. In this case, full nodes will obviously reject the block as in their view, it isn’t even a block if it doesn’t come with the block body. Light clients, however, can be presented with the header chain and have no way of noticing that the data is missing. At the same time, full nodes can’t produce fraud proofs, because they would be missing the data that’s necessary to create fraud proofs.

To counter this, we need a mechanism for light clients to verify data availability. This would ensure that a block producer hiding data cannot get away by convincing a light client otherwise. It would also force the block producer to reveal parts of the data, making the entire network have access to the entire block in a collaborative manner.

Let us dive a bit deeper into the problem with the help of an example. Suppose the block producer Alice constructs a block B with transactions tx1, tx2, …, txn. Let us suppose tx1 is a malicious transaction. If tx1 is broadcasted, any full node can verify it is malicious and send this to a light client as a fraud-proof who would immediately know that the block is unacceptable. However, if Alice wants to hide tx1, she reveals the header and all transactional data except tx1. The full nodes cannot verify the correctness of tx1.

One might think a simple solution is if all light clients simply sample the transactions at random, and if they find their samples to be available, they can be confident that the block is available. Let the light nodes query for any one transaction, uniformly at random. The probability that the light client queries tx1 is 1/n. So, with an overwhelming probability, Alice is able to fool the light clients into accepting a malicious transaction. In other words, most light clients will be fooled. Because of the non-attributable nature, full nodes cannot prove in any manner that tx1 is not available. Unfortunately, increasing the number of samples does not make this much better.

So, what do we do about this?

The solution to this problem lies in introducing redundancy into a block. There exists a rich set of literature on coding theory in general, and erasure coding in particular, which can help us with this problem.

In a nutshell, erasure coding allows us to extend any n chunks of data into 2n chunks of data in such a way that any n out of 2n are sufficient to reconstruct the original piece of data (the parameters are tunable, but here we consider this for simplicity).

If we force the block producer to erasure code the transactions tx1, tx2, …, txn, then, to hide a single transaction, it would need to hide n+1 data chunks as any n are sufficient to construct the entire transaction set. In this case, a constant number of queries give the light client very high confidence that the underlying data is indeed available.

Woah, so this is it?

No. Although this simple trick makes the job of hiding more difficult, it is still possible that the block producer just intentionally performs the erasure coding in a wrong manner. However, a full node can verify whether this erasure coding was correctly done and if not, it can prove this to a light client. This is another type of fraud-proof, just like in the case of malicious transactions above. Interestingly, there needs to be a single honest full node neighbor of a light client for it to be certain that if the block is malicious, then it will receive a fraud-proof. This ensures that the light client has access to a chain with no malicious transaction with a very high probability.

But there is a catch. If done naively, the size of some fraud proofs can be in the order of the size of the block itself. The resource assumption we had on the light client prohibits us from using such a design. There have been improvements in this regard by using multi-dimensional erasure coding techniques that reduce the size of the fraud proofs at the cost of commitment size. For sake of brevity, we do not cover these but this paper has a detailed analysis of it.

The problem with fraud-proof-based solutions is that the light clients are never completely sure about any block for which it has not yet received a fraud-proof. Also, they continuously trust its full node peers to be honest. Honest nodes also need to be incentivized to continuously keep auditing blocks.

We focussed our attention here on systems that guarantee if the block encoding is invalid, full nodes can detect it and provide proof to light clients that convince them of misbehavior. However, in the next section, we will look at block encodings which guarantee that only valid encodings can be committed into the chain. This obliterates the need for fraud proofs that prove encoding errors. These validity proof-based solutions enable applications to use the system without having to wait for these kinds of fraud proofs from full nodes.

So how do these solutions work?

Recently, polynomial commitments have seen reinvigorated interest from the blockchain space. These polynomial commitments, especially the constant-sized KZG/Kate commitments to polynomials, can be used to design a neat DA scheme without the need for fraud proofs. In short, Kate commitments allow us to commit to a polynomial using a single elliptic curve group element. Moreover, the scheme supports us to prove that at some point i the polynomial φ evaluates to φ(i) using a constant-sized witness. The commitment scheme is computationally binding and is also homomorphic, allowing us to neatly avoid fraud proofs.

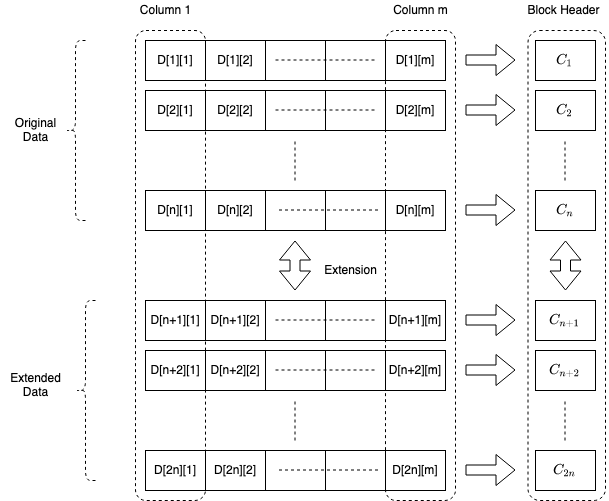

We force the block producer to take the original transactional data and arrange it in a 2D matrix of size n x m. It uses polynomial interpolation to extend each column of size n into columns of size 2n. Each row of this extended matrix generates a polynomial commitment and sends these commitments as part of the block header. A schematic representation of the block is given below.

The light clients query any cell of this extended matrix to get the witness which enables it to immediately verify it against the block header. The constant-sized membership proofs make the sampling extremely efficient. The homomorphic nature of commitment makes sure that the proof verifies only if the block is constructed correctly and the polynomial interpolation ensures that a constant number of successful samples means that the data is available with a very high probability.

A schematic representation of the block

The finer details of the scheme along with further optimizations and cost estimates are beyond the scope of this article. However, we would like to point out that although we discuss a 2D scheme here, similar guarantees can be provided with a 1D scheme as well, which has a smaller header size at the cost of less parallelism and light client sampling efficiency. We will delve deeper into it in follow-up articles.

What are the other alternatives and what next?

The higher dimensional erasure code and Kate commitments are not the only ways to approach the DA problem. There are other ways that we skipped here like Coded Merkle Trees, Coded Interleaving Tree, FRI, and STARK-based approaches, but each has its merits and demerits.

At Polygon, we have been working on a Data Availability solution using Kate commitments. In later posts, we will cover the implementation details, how you can use it today and how we aim to transform the DA problem space.

Source : blog.polygon